The Jetson line of modules brings accelerated AI to edge devices, allowing for truly innovative uses for IoT devices. The balena platform supports these modules (Nano, TX2, Xavier, Orin), enabling you to deploy, maintain, and update fleets of one to hundreds of thousands with ease. This is the third update of our series about how to use Jetson devices on the balena platform.

Hardware review

NVIDIA Jetson is a series of computing modules from NVIDIA designed for accelerating Machine Learning (ML) applications using relatively low power consumption. These boards use various versions of NVIDIA’s Tegra System on a Chip (SoC) that include an ARM CPU, GPU, and memory controller all-in-one.

Jetson products are credit-card-sized plug-in modules intended to be integrated into end-user products and devices. To use a Jetson module, you must attach it to a carrier board, which provides access to its various I/O. NVIDIA makes reference carrier boards known as Jetson Developer Kits, which are meant only for prototyping and development, not for use in a production environment. Third-party companies such as Seeed Studio, Advantech, Aetina, and CTI make Jetson carrier boards that can be used for development and/or production.

Each balenaOS image targets a specific board using a specific module, with that combination identified by a “balena machine name”. Even if a certain board supports more than one module, only one image will work for each particular combination. For example, the jetson-nano image should only be used with the NVIDIA Jetson Nano Developer Kit fitted with the 4GB microSD Nano module.

Let’s take a quick look at the various Jetson boards and associated modules supported on balenaOS: (* = discontinued item)

Jetson Nano

The Nano was introduced in 2019 and is popular in hobbyist robotics due to its low price point. The module has a 128-core GPU and 4GB of RAM, with 16GB of onboard eMMC storage.

NVIDIA sells a popular development board called the Jetson Nano Developer Kit which uses microSD for storage rather than eMMC. Originally introduced at $99 it now sells for $149. There was also a 2GB RAM version that is no longer available. All three variations are supported by balenaOS.

| Balena machine name | Carrier board | Jetson module |
|---|---|---|
| jetson-nano | Jetson Nano Developer Kit | 4GB Nano with microSD |
| jetson-nano-2gb-devkit | Jetson Nano Developer Kit | 2GB Nano with microSD* |
| jetson-nano-emmc | Jetson Nano Developer Kit (B01) | Production Nano module with eMMC |
| jn30b-nano | Auvidea JN30B carrier board | Production Nano module with eMMC |

Jetson TX2

The Jetson TX2 was announced in March 2017 as a compact module for use in low-power situations. It has 256 GPU cores, twice that of the Jetson Nano. Currently NVIDIA offers two versions of the TX2 module: a 4GB RAM version with 16GB of eMMC storage, and the TX2i for “industrial environments” with 8GB of RAM and 32GB of eMMC storage. (The TX2i is not currently supported by balenaOS.)

| Balena machine name | Carrier board | Jetson module(s) |
|---|---|---|
| jetson-tx2 | Jetson TX2 Developer Kit* | TX2 module with 8GB RAM/32GB eMMC* |
| astro-tx2 | CTI Astro Carrier | TX2 4GB RAM/16GB eMMC |
| orbitty-tx2 | CTI Orbitty Carrier | TX2 4GB RAM/16GB eMMC |
| spacely-tx2 | CTI Spacely Carrier | TX2 4GB RAM/16GB eMMC |

The TX2 NX was launched in 2021 and is more compact than the TX2, sharing the same smaller form-factor as the Jetson Nano. With up to 2.5X the performance of the Nano via 256 cores, it is meant to sit performance-wise between the Jetson Nano and TX2. The TX2 NX is supported by balenaOS using the following boards:

| Balena machine name | Carrier board | Jetson module |
|---|---|---|
| jetson-tx2-nx-devkit | Jetson Xavier NX Developer Kit* | TX2 NX with 4GB RAM and 16GB eMMC |
| photon-tx2-nx | CTI Photon AI Camera Platform | TX2 NX with 4GB RAM and 16GB eMMC |

Jetson Xavier NX

In 2019 NVIDIA unveiled a stripped-down version of its high-end Jetson AGX Xavier module that, with 384 GPU cores, is more powerful than the TX2 while shrinking the size of the module to match the Jetson Nano. There’s a version with 16GB of RAM and one with 8GB of RAM, both offering 16GB of eMMC storage.

| Balena machine name | Carrier board | Jetson module |
|---|---|---|
| jetson-xavier-nx-devkit-emmc | Jetson Xavier NX Developer Kit* | Jetson Xavier NX or Jetson Xavier NX 16GB |
| jetson-xavier-nx-devkit | Jetson Xavier NX Developer Kit* | Jetson Xavier NX SD card module for dev kit only* |
| cnx100-xavier-nx | Auvidea CNX100 | Jetson Xavier NX or Jetson Xavier NX 16GB |
| photon-xavier-nx | CTI Photon Xavier NX | Jetson Xavier NX or Jetson Xavier NX 16GB |

Jetson AGX Xavier

The AGX Xavier has 512 GPU cores and comes with 64GB/32GB of RAM as well as 64GB/32GB of eMMC storage. It is touted as having “more than 20X the performance and 10X the energy efficiency of the Jetson TX2”.

| Balena machine name | Carrier board | Jetson module |
|---|---|---|
| jetson-xavier | Jetson AGX Xavier Developer Kit | Jetson AGX Xavier module (four variations of RAM/eMMC) |

Jetson Orin

In 2022 NVIDIA released its most advanced Jetson module yet, the Orin series, starting with the AGX Orin featuring 2,048 GPU cores. It has 6x the processing power of the AGX Xavier and is designed for advanced robotics and AI edge applications such as manufacturing, logistics, retail, healthcare, and others. Balena is pleased to support this latest NVIDIA Jetson board!

| Balena machine name | Carrier board | Jetson module |
|---|---|---|
| jetson-agx-orin-devkit | Jetson AGX Orin Developer Kit | Jetson AGX Orin Module (32GB RAM/64GB eMMC) |
| jetson-orin-nano-devkit-nvme | Jetson Orin Nano Developer Kit | Jetson Orin Nano 8GB |
| jetson-orin-nx-xavier-nx-devkit | Xavier NX Devkit NVME | Jetson Orin NX 16GB |
| jetson-orin-nx-seeed-j4012 | Seeed J4012 | Jetson Orin NX 16GB RAM |

Using carrier boards not in this list

If you want to use a carrier board that is not listed above, first try the balenaOS image that most closely matches the Jetson module being used. As long as the module corresponds to an image, it should at least boot. Depending on how closely the carrier board matches the reference NVIDIA board, other features such as Ethernet, WiFi, etc. may work as well. If a particular feature does not work, you’ll need to obtain the board’s device tree file (DTB). For the TX2, TX2 NX, Nano, and AGX Orin, you can test the DTB by copying the device-tree blob into /mnt/sysroot/active/current/boot/ and selecting it in the dashboard configuration. (The AGX Xavier and Xavier NX don’t load DTBs dynamically from balenaOS, so they can’t be tested in this manner.) This is not a permanent solution, since a subsequent host OS update would overwrite it with the default DTB. For permanent inclusion in a future balenaOS version, open a pull request adding the device-tree blob to the balena-jetson repository.

NVIDIA software

The board support package (BSP) for the Jetson series is named L4T, which stands for Linux4Tegra. It includes a Linux kernel, a UEFI-based bootloader, NVIDIA drivers, flashing utilities, a sample filesystem based on Ubuntu 20.04, and more for the Jetson platform. These drivers can be downloaded on their own, or as part of a larger bundle of Jetson software known as JetPack. In addition to the L4T package, JetPack includes deep learning tools such as TensorRT, cuDNN, CUDA tools, and more.

As of this writing, the current version of L4T is 35.1 and the latest version of JetPack is 5.0.2. This release supports Jetson AGX Orin 32GB production module and Jetson AGX Orin Developer Kit, Jetson AGX Xavier series and Jetson Xavier NX series modules, as well as Jetson AGX Xavier Developer Kit and Jetson Xavier NX Developer Kit. (Although as of this writing, balenaOS does not yet support L4T 35.1 on the AGX Xavier and Xavier NX)

Jetson Nano and Jetson TX2 are only supported through JetPack 4 versions. (TX2 modules sold after November 2022 must use JetPack 4.6.3/L4T 32.7.3 due to new memory configurations)

There are a few ways JetPack can be installed on a Jetson board:

- For the Jetson Nano and Xavier NX Developer Kits, NVIDIA provides SD card images.

- JetPack is part of the NVIDIA SDK Manager, which also includes software for setting up a development environment.

- JetPack can also be installed using Debian packages.

Using the SD card images or installing JetPack will also install a desktop version of Ubuntu on your device (18.04 with JetPack 4, 20.04 with JetPack 5). Since we want to use the minimal, Yocto-based balenaOS on our Jetson device, we won’t use either of those tools. It’s possible to extract the L4T drivers from the SDK Manager, but there are easier ways to obtain the drivers, as discussed below.

Flashing Jetson devices

Once you have created a fleet in balena and downloaded the appropriate balenaOS image from the dashboard, you’ll need to get that image onto your Jetson device so it can boot from it. Simply follow the instructions provided when adding a new device to your fleet. In many cases, you’ll be directed to our custom flashing utility called jetson-flash.

The jetson-flash tool invokes NVIDIA’s proprietary software to properly partition the eMMC and place the required balenaOS software in the necessary location to make it bootable. Even Jetson devices without eMMC may require the flashing tool to make balenaOS bootable. This is because newer versions of JetPack use a small amount of onboard flash memory (called QSPI) for the bootloader instead of the SD card. The jetson-flash tool can write to this memory and allow a Jetson to boot from eMMC or a microSD card as appropriate.

Using NVIDIA GPUs with Docker

Being platform and hardware-agnostic, Docker containers do not natively support NVIDIA GPUs. One workaround is to install all of the necessary NVIDIA drivers inside a container. A downside to this solution is that the version of the host driver must exactly match the version of the driver installed in the container, which reduces container portability.

To address this, NVIDIA has released a number of custom tools over the years to allow containers to access the GPU drivers on the host. These tools include NVIDIA Docker, NVIDIA Docker 2, the NVIDIA Container Runtime, and the most recent tool: NVIDIA Container Toolkit. Most of the base images provided by NVIDIA require one of these tools. (When you see examples that contain --runtime=nvidia or --gpus all in the command, it indicates that the image requires one of these tools.)

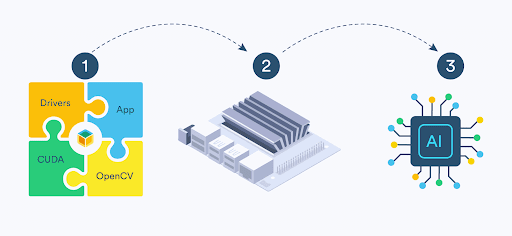

However, on the minimalist balenaOS, which is designed for running containers on small devices, you won’t find all of these drivers on the host, mainly due to their large size. (You will, however, find the basic BSP components needed to boot the board and some other components from our upstream Yocto provider, meta-tegra.) So the NVIDIA Container Toolkit (and any image that requires it) is not an option. This leads us back to the option mentioned earlier for containers on balenaOS that require access to the NVIDIA GPU: install all of the necessary NVIDIA drivers into the container and make sure they match the BSP version on the host OS.

balenaOS on Jetson devices

Each release of balenaOS is built using a specific version of the NVIDIA L4T package (BSP). To find out the L4T version, you can run the command uname -a on the HostOS:
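A minimal sketch of that check follows. It assumes the kernel release string carries an l4t-r<version> suffix, as in the sample string shown in the comment; that format may not hold for every balenaOS release. The helper name l4t_version_from_release is hypothetical and not part of balenaOS.

```shell
# Hypothetical helper: print the L4T version embedded in a kernel release
# string shaped like "4.9.253-l4t-r32.7.1" (assumed format).
# Prints nothing when no "l4t-r" marker is present.
l4t_version_from_release() {
    printf '%s\n' "$1" | sed -n 's/.*l4t-r\([0-9][0-9.]*\).*/\1/p'
}

# uname -a prints the full kernel banner; uname -r prints only the release field.
uname -a
l4t_version_from_release "$(uname -r)"
```

On a machine whose kernel release has no l4t-r marker, the last command prints nothing, which is a hint that the string needs to be read by eye instead.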

Or, if you don’t have access to a device, follow these steps:

1. Go to https://github.com/balena-os/balena-jetson

2. From the branch dropdown, click on the “Tags” tab, and select a balenaOS version.

3. Look for the L4T version in the layers/meta-balena-jetson/recipes-bsp/tegra-binaries folder

Now that we know which version of L4T is running on the host, we can install the same version in our containers that require GPU access. (If there is a small version mismatch, apps may still work, but the larger the mismatch, the more likely difficult-to-find issues and/or glitches will occur.)
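To make the idea of a small versus large mismatch concrete, here is a rough sketch of a comparison a container start-up script could run. The function name compare_l4t and the three result labels are hypothetical, and treating "same leading components, different last component" as a small mismatch is an assumption for illustration, not a balena or NVIDIA rule. It expects three-part versions such as 32.7.1.

```shell
# Hypothetical helper: compare the host's L4T version ($1) with the version
# the container image was built for ($2).
compare_l4t() {
    host="$1"
    image="$2"
    if [ "$host" = "$image" ]; then
        echo "match"
    elif [ "${host%.*}" = "${image%.*}" ]; then
        # Everything before the last dot is equal; only the last component differs.
        echo "patch-differs"
    else
        echo "mismatch"
    fi
}

compare_l4t 32.7.1 32.7.1   # prints "match"
compare_l4t 32.7.2 32.7.1   # prints "patch-differs"
compare_l4t 32.3.1 32.7.1   # prints "mismatch"
```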

balenaOS includes a feature (see “Contracts” below) to help you make sure that any differences between the host and container drivers do not negatively affect your applications. First, let’s go through an example of how to load the NVIDIA drivers in our container.

Sample containers

All of our NVIDIA base images for the Jetson family of devices include NVIDIA package repositories in their apt sources list. This means that installing CUDA is as simple as issuing the following command (after first running apt-get update) or including it in your Dockerfile:

apt-get install -y nvidia-l4t-cuda

You can use the apt-cache search command to find a list of packages matching a keyword. For instance, apt-cache search cuda returns a list of all the available packages that contain the word “cuda” in their name or description. To view more details about a package, use apt-cache show and then the name of the package.

In our previous tutorial, we provided a sample app for the Jetson Nano that demonstrated how to install and run CUDA and OpenCV on a Jetson Nano. We’ve expanded that example repository to include examples for the Jetson TX2, Xavier, and Orin as well. You can use the button below to install both containers on your balena device:

You’ll be prompted to create a free balenaCloud account if you don’t already have one, and then the application will begin building in the background. Be sure to choose the appropriate “Default Device Type” from the drop down for the type of Jetson board you are using. Then click “add device” and follow the instructions along the right side of the screen to flash your device.

You can also use the balena CLI and push the application to balenaCloud manually. You’ll first need to clone this repository to your computer. For detailed steps on using this method, check out our getting started tutorial.

Once your application has finished building (It could take a while!) and your Jetson device is online, you should see the application’s containers in a running state on the dashboard:

At this point, make sure you have a monitor plugged into the Jetson’s HDMI port and that it is powered on.

CUDA examples

CUDA is a parallel computing platform and programming model that helps developers more easily harness the computational power of NVIDIA GPUs. The cuda container has some sample programs that use CUDA for real-time graphics. To run these samples, you can either SSH into the app container using the balena CLI with balena ssh <ip address> cuda (where <ip address> is the IP address of your Jetson), or use the terminal built into the dashboard, selecting the cuda container.

First, start the X11 window system which will provide graphic support on the display attached to the Jetson:

X &

(Note the & causes the process to run in the background and return our prompt, although you may need to hit enter more than once to get the prompt back.)

The most visually impressive demo is called “smokeParticles” and can be run by typing the following command:

./smokeParticles

It displays a ball in 3D space with trailing smoke. At first glance it may not seem that impressive until you realize that it’s not a video playback, but rather a graphic generated in real time. The Jetson’s GPU is calculating the smoke particles (and sometimes their reflection on the “floor”) on the fly. To stop the demo you can hit CTRL + C. Below are the commands to run a few other demos, some of which just return information to the terminal without generating graphics on the display.

./deviceQuery

./simpleTexture3D

./simpleGL

./postProcessGL

You can use the Dockerfile in our CUDA sample as a template for building your own containers that need CUDA support. (The steps that install and build the CUDA samples can be removed to save space and build time.)

OpenCV examples

OpenCV (Open Source Computer Vision Library) is an open source computer vision and machine learning software library that can take advantage of NVIDIA GPUs using CUDA. Let’s walk through some noteworthy aspects of the opencv Dockerfile.

Since OpenCV will be accelerated by the GPU, it has the same CUDA components as the cuda container. In addition, you’ll notice a number of other utilities and prerequisites for OpenCV are installed in the Dockerfile. We then download (using wget) zip files for version 4.5.1 of OpenCV and build it using CMake. Building OpenCV from source in this manner is the recommended way of installing OpenCV. The benefits are that it will be optimized for our particular system and we can select the exact build options we need. (The build options are in the long line with all of the -D flags.) The downsides to building OpenCV from scratch are deciding which options to include and the time it takes to run the build. There are also file size considerations due to all of the extra packages that usually need to be installed.

Our example solves the size problem by using a multistage build. Notice that after we install many packages and build OpenCV, we basically start over with a new, clean base image. The multistage build lets us copy over only the OpenCV runtime files we built in the first stage, leaving behind all of the extra packages needed to perform the build.

To see the OpenCV demos, you can either SSH into the app container using the balena CLI with balena ssh <ip address> opencv (where <ip address> is the IP address of your Jetson), or use the terminal built into the dashboard, selecting the opencv container. (If you recently were running the CUDA example, you may need to restart the containers to release the X display.)

Enter the following command to direct our output to the first display:

export DISPLAY=:0

Now enter this command to start the X11 window system in the background:

X &

Finally, type one of the following lines to see the samples on the display:

./example_ximgproc_fourier_descriptors_demo

./example_ximgproc_paillou_demo corridor.jpg

If all goes well, you will see example images on the monitor plugged into the Jetson’s HDMI port. While these examples are not particularly exciting, they are meant to confirm that OpenCV is indeed installed.

Manage driver discrepancies with contracts

In our previous example, we saw the need to ensure that NVIDIA drivers in a container match the driver versions on the host. As new versions of the host OS are released with updated versions of the drivers, it may seem a bit cumbersome to keep track of any discrepancies. Balena supervisors (>= version 10.6.17) include a feature called “contracts” that can help in this situation.

Container contracts are defined in a contract.yml file and must be placed in the root of the build context for a service. When deploying a release, if the contract requirements are not met, the release will fail, and all services on the device will remain on their current release. Let’s see what a container contract for our previous example might look like:

type: "sw.container"
slug: "enforce-l4t"
name: "Enforce l4t requirements"
requires:
  - type: "sw.l4t"
    version: "32.7.1"

Place a copy of this file named contract.yml in both the cuda and opencv folders, then re-push the application. Now, if the L4T version on the host doesn’t match the version required by the contract, the release will not be deployed. Since they currently match, you won’t notice any change. However, if you tried pushing to a device running an older OS, for example 2.47.1+rev3, which is based on L4T 32.3.1, the release won’t deploy.
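One way to do the copy step is sketched below in shell, run from the root of the sample repository. The layout with cuda and opencv folders at the top level is assumed from the description above, and the push command is left as a comment because the fleet name is a placeholder.

```shell
# Write the same contract into each service folder.
# mkdir -p does nothing when the folder already exists.
for service in cuda opencv; do
    mkdir -p "$service"
    cat > "$service/contract.yml" <<'EOF'
type: "sw.container"
slug: "enforce-l4t"
name: "Enforce l4t requirements"
requires:
  - type: "sw.l4t"
    version: "32.7.1"
EOF
done

# Then re-push the application with the balena CLI, for example:
#   balena push <fleet name>
```

Writing the file from a single heredoc keeps the two copies identical, so the services cannot drift apart on the required L4T version.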

If you are using a multi-container application and you want to make sure that certain containers are deployed even if another container contract fails, you can use optional containers. Take a look at this example using optional containers which get deployed based on the L4T version that is running on each particular device.

Going further

If you’d like to learn more about using the Jetson Devices for AI projects on balenaOS, check out the examples below.

- OpenDataCam – Quantify and track moving objects with live video analysis. Updated in 2022 and now with Orin support!

- Build an AI-driven object detection algorithm with balenaOS and alwaysAI

- Another example repository for getting started with the Jetson on balena

If you have any questions, you can always find us on our forums, on Twitter, Instagram, or on Facebook.

Hi @andrewnhem, thanks for making available this supportive blog post. I am having an issue to use L4t-pytorch nvidia container in Dockerfile that I push to BalenaOS of my Nvidia device. For my app, I need to use GPU based Pytorch. Therefore, I need cuda toolkit running on the device. However, it seems that there is an issue related to that on BalenaOS. I guess there are lack of required Nvidia tools in it. Can you please guide me on this?

Have there been any updates to the process for deploying CUDA and OpenCV to a Jetson TX2 (or even for the Nano)? I’m unable to make this procedure work with my Jetson TX2 and CTI Astro carrier board. I suspect I have a mismatch between the Nvidia drivers in balenaOS and the version of L4T and/or CUDA available in the balenaOS repository.

What versions are you trying to install? Thanks!